Indie game publisher Finji has publicly accused TikTok of using generative AI to alter its game advertisements without consent, resulting in content that the studio describes as sexually exploitative and racially offensive. The incident has reignited concerns over how automated ad systems operate on large platforms and how little control developers may have once their content enters those ecosystems.

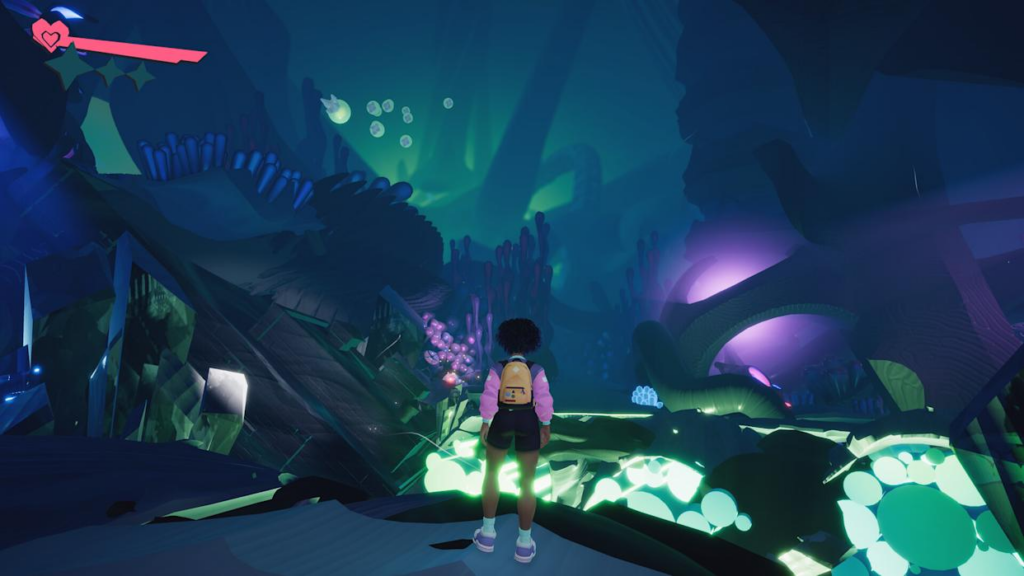

The controversy centers on promotional materials for Usual June, a narrative-driven indie game published by Finji. According to the studio, TikTok’s AI-powered ad tools transformed official game visuals into altered images that exaggerated the main character’s body and presentation. These versions sharply contradicted the original artistic intent and values of the game.

Finji learned about the issue after fans shared screenshots through Discord and social media. The images showed the protagonist redesigned in a hyper-sexualized style, including revealing clothing and distorted body proportions. Finji stated that these visuals also reinforced harmful racial and gender stereotypes, which made the situation even more serious.

Rebekah Saltsman, CEO and co-founder of Finji, explained that the studio had already disabled TikTok's AI ad features, such as Smart Creative and Automate Creative, inside the ad manager. Despite those settings, the AI-generated ads still appeared under Finji's official account name. As a result, viewers could reasonably assume the studio had approved the content.

At first, TikTok denied that AI tools had altered the ads. The platform claimed it found no evidence of automated creative changes, even after Finji submitted screenshots. After continued pressure, however, TikTok acknowledged that the ads had run through its Catalog Ads system. TikTok described this system, which automatically combines images and videos to optimize ad performance, as an experimental initiative.

Internal emails later confirmed that TikTok staff recognized the incident caused commercial and reputational harm to Finji. Even so, Finji says TikTok has not issued a formal public apology or outlined clear steps to prevent similar incidents. Saltsman stated that the company effectively closed the case without meaningful accountability.

This situation highlights a growing risk in automated advertising. Platforms increasingly prioritize engagement and scale, yet AI systems can override creator intent when they lack proper safeguards. For smaller studios, the damage can be severe. They often lack the leverage to challenge large platforms or recover quickly from public-facing mistakes tied to their brand.

Finji is known for publishing respected indie titles such as Tunic and Night in the Woods. The studio’s reputation rests heavily on thoughtful storytelling and ethical presentation. That made the AI-generated ads especially damaging in the eyes of both the developers and their community.

The broader indie scene has reacted with concern. Many developers worry that similar AI-driven changes could affect their own campaigns without warning. As ad platforms expand automation, calls are growing louder for clearer consent systems, better transparency, and stronger opt-out protections.

For now, the case remains unresolved. It serves as a warning that AI efficiency can come at the cost of creative control and ethical responsibility. As generative tools spread across marketing pipelines, studios and platforms alike may need to rethink where automation should stop and human oversight must begin.

Origin: Engadget