Generative AI Search Prefers Less Popular Websites

Artificial intelligence is rapidly transforming how people access information, and new research helps quantify one major difference between traditional and AI-powered search. The study shows that AI search engines tend to cite less popular websites, often ones that would not even appear in the top 100 links of an organic Google search for the same query. Researchers from Ruhr University Bochum published the findings in a pre-print paper titled “Characterizing Web Search in The Age of Generative AI.” Their work analyzes how the sourcing of information changes when generative models sit between the user and the web.

The New Ranking Paradox

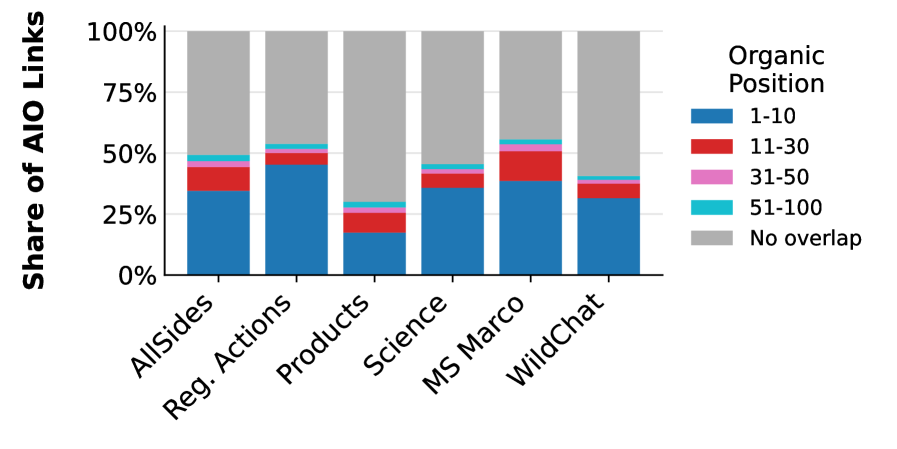

The study found a significant divergence in sourcing patterns. Traditional organic search engines prioritize domain authority and popularity. Conversely, AI engines frequently venture into more obscure corners of the internet.

Sources cited by the AI engines were much more likely to fall outside the most popular tracked domains. Specifically, they were more likely to fall outside both the top 1,000 and the top 1 million domains in the Tranco popularity ranking. Google’s Gemini search showed this tendency in particular: the median source it cited fell outside the top 1,000 domains across all test results.
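To make the popularity comparison concrete, here is a minimal Python sketch of how such figures could be computed. It is not the study's code: the rank table, the domain names, and the function names are hypothetical, and treating unranked domains as having an infinite rank is an assumption made for illustration.

```python
import math
from statistics import median

def share_outside_top_n(cited_domains, rank_of, n):
    """Fraction of cited domains ranked worse than n (unranked counts as outside)."""
    if not cited_domains:
        return 0.0
    outside = sum(1 for d in cited_domains if rank_of.get(d, math.inf) > n)
    return outside / len(cited_domains)

def median_rank(cited_domains, rank_of):
    """Median popularity rank of the cited domains (unranked = infinity)."""
    return median(rank_of.get(d, math.inf) for d in cited_domains)

# Hypothetical popularity ranks (1 = most popular); not real data.
rank_of = {
    "wikipedia.org": 10,
    "example-news.com": 800,
    "small-blog.example": 250_000,
}

# Hypothetical domains cited by an AI engine; the last one is unranked.
cited = ["wikipedia.org", "small-blog.example", "niche-forum.example"]

print(share_outside_top_n(cited, rank_of, 1_000))      # share outside the top 1,000
print(share_outside_top_n(cited, rank_of, 1_000_000))  # share outside the top 1 million
print(median_rank(cited, rank_of))                     # median rank of cited domains
```

In this toy data, two of the three cited domains fall outside the top 1,000, so the median cited source does too, which is the kind of pattern the study reports for Gemini.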

Furthermore, a large portion of the AI-sourced content was absent from top traditional rankings. A full 53 percent of the sources cited by Google’s AI Overviews did not appear in the top 10 Google links for the same query, and 40 percent of those sources did not appear even in the top 100. This suggests a substantial shift in how AI models prioritize information.
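The overlap figures above boil down to a simple set comparison per query. The following Python sketch shows one way to compute the share of cited sources that are missing from the top k organic results. The URLs and the function name are hypothetical, and the sketch compares exact strings; how the study normalized or matched URLs is not described here, so that choice is an assumption.

```python
def share_missing_from_top_k(cited_urls, organic_urls, k):
    """Fraction of cited URLs that do not appear among the first k organic results."""
    if not cited_urls:
        return 0.0
    top_k = set(organic_urls[:k])
    missing = sum(1 for url in cited_urls if url not in top_k)
    return missing / len(cited_urls)

# Hypothetical organic ranking for one query, best result first.
organic = ["a.example", "b.example", "c.example", "d.example"]

# Hypothetical sources cited in the AI answer for the same query.
cited_sources = ["a.example", "d.example", "z.example"]

print(share_missing_from_top_k(cited_sources, organic, 2))  # missing from the top 2
print(share_missing_from_top_k(cited_sources, organic, 4))  # missing from the top 4
```

Averaging this value over many queries, with k set to 10 or 100, would yield percentages of the kind quoted above.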

Sourcing Types and Information Loss

The study also analyzed the types of sources generative models choose. Researchers found that GPT-based searches were more likely to cite corporate entities and encyclopedias. Interestingly, they almost never cited social media websites. This indicates a preference for institutional, factual-style sources.

However, this shift comes with a significant drawback for users. Generative engines tend to compress information, sometimes omitting secondary or ambiguous aspects that traditional search results retain. This was especially noticeable for ambiguous search terms, where organic search results provided substantially better overall coverage. The result is a potential loss of nuance.

The Power and Limits of Internal Knowledge

Generative AI search engines have one distinct advantage over traditional search: they can weave pre-trained “internal knowledge” together with data pulled from the web. GPT-4o with Search Tool often demonstrated this, providing direct responses based purely on its training data without citing any external web sources.

However, this reliance on internal data becomes a serious limitation for timely information. When researchers sought up-to-date data, the model often failed to search the web and instead responded with messages asking the user to provide more information. This suggests that the AI can struggle when asked for details beyond its training cutoff date.

Ultimately, the study quantifies the major shift in the information ecosystem. AI search relies on a fundamentally different definition of source reliability and relevance.

origin: Ars Technica