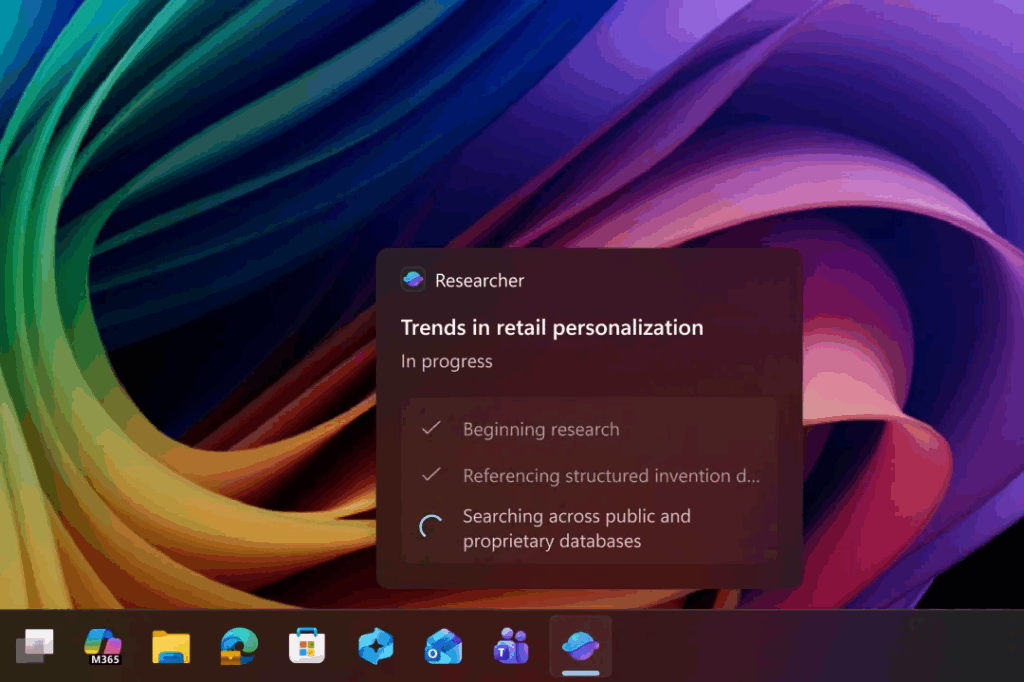

Microsoft has issued a serious warning regarding its Copilot Actions feature, an AI extension in Windows 11 that helps manage files, schedule meetings, and send emails on behalf of users. Security researchers have discovered that Copilot Actions is vulnerable to prompt injection attacks, in which attackers embed hidden instructions in documents or web pages and cause the AI to take unintended actions, such as exfiltrating sensitive information or downloading malware. Although the feature is designed to boost productivity, the underlying large language models carry inherent risks. Microsoft acknowledged that agent-style AI behavior is unpredictable, leaving room for new cross-prompt injection attacks.

Experts are particularly concerned about two issues: hallucinations, in which the AI generates false information without any basis, and prompt injection, which attackers can use to steal data, execute harmful code, or even drain users' cryptocurrency. For example, instructions hidden in a seemingly normal Excel file or email can trick Copilot, when it is asked to summarize the content, into sending data elsewhere without the user's knowledge. This vulnerability mirrors an earlier attack involving Mermaid diagrams in Microsoft 365 Copilot, which was patched in September, but questions remain about whether Microsoft is pushing new features without fully controlling the risks.

Microsoft emphasizes that Copilot Actions is disabled by default and recommends that only advanced users enable it, to reduce the chance of cross-prompt injection attacks (XPIA). The company has implemented multiple safeguards, including prompt classifiers, markdown sanitization, and human-in-the-loop verification, although it has not clarified the exact level of expertise required for safe use. IT administrators remain concerned about governance in corporate environments.
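To make one of these safeguards concrete, here is a minimal sketch of what markdown sanitization could look like. It is not Microsoft's implementation: the `TRUSTED_HOSTS` allow-list, the `sanitize_markdown` function, and the regular expressions are all assumptions chosen for illustration. The idea is to strip hidden HTML comments and neutralize links and images that point to untrusted hosts, since such URLs are a common way to smuggle data out.

```python
import re
from urllib.parse import urlparse

# Hypothetical allow-list; a real deployment would define its own trusted hosts.
TRUSTED_HOSTS = {"learn.microsoft.com"}

# Simplified patterns for inline markdown images and links (illustrative only).
IMAGE_RE = re.compile(r"!\[([^\]]*)\]\(([^)\s]+)[^)]*\)")
LINK_RE = re.compile(r"(?<!!)\[([^\]]*)\]\(([^)\s]+)[^)]*\)")
HTML_COMMENT_RE = re.compile(r"<!--.*?-->", re.DOTALL)


def _is_trusted(url: str) -> bool:
    """Accept only HTTPS URLs whose host is on the allow-list."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in TRUSTED_HOSTS


def sanitize_markdown(text: str) -> str:
    """Remove hidden comments and neutralize untrusted images and links."""
    # Hidden HTML comments are a simple place to stash injected instructions.
    text = HTML_COMMENT_RE.sub("", text)
    # Untrusted images are dropped: rendering them can leak data via the URL.
    text = IMAGE_RE.sub(
        lambda m: m.group(0) if _is_trusted(m.group(2))
        else f"[image removed: {m.group(1)}]",
        text,
    )
    # Untrusted links keep their visible text but lose the target URL.
    text = LINK_RE.sub(
        lambda m: m.group(0) if _is_trusted(m.group(2)) else m.group(1),
        text,
    )
    return text
```

A filter like this only narrows one exfiltration channel. It does nothing about instructions written in plain visible text, which is why it would be layered with classifiers and human approval rather than used alone.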

Researchers compare this risk to macros in Microsoft Office: highly vulnerable, but often used out of necessity. Copilot Actions has been dubbed a “supercharged version” of macros because it has deeper system access but is harder to monitor. If it is widely deployed, administrators may struggle to maintain control, especially in environments using Intune or Entra ID. Microsoft is developing real-time detection tools in Copilot Studio to block suspicious activity before damage occurs.

Although Copilot Actions started as an opt-in experimental feature, history shows that Copilot features can become enabled by default without clear notification, leaving users to manage security themselves. Experts warn that relying on users to manage risk is unsustainable, particularly as AI is embedded in more products. Organizations should verify document sources and closely monitor AI outputs.

Microsoft says it maintains robust security measures, including logging AI behavior for audits, requesting permission before accessing data, and ensuring GDPR compliance. The main challenge remains human oversight: users must correctly judge the actions they approve. As AI becomes increasingly integrated into Windows, inadequate protections could make Copilot Actions a significant vulnerability in agentic operating systems.
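The combination of permission prompts and audit logging can be sketched as a small approval gate. This is an illustrative assumption, not a description of how Copilot Actions works internally: `ProposedAction`, `SENSITIVE_TOOLS`, and `run_with_approval` are hypothetical names, and the `approve` callback stands in for whatever confirmation dialog the user would see.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Callable, List


@dataclass
class ProposedAction:
    """An action the agent wants to take (hypothetical structure)."""
    tool: str
    target: str
    summary: str


# Hypothetical set of tools that always require explicit user approval.
SENSITIVE_TOOLS = {"send_email", "delete_file", "download"}


def run_with_approval(
    action: ProposedAction,
    approve: Callable[[ProposedAction], bool],
    audit_log: List[str],
) -> bool:
    """Ask the user before sensitive actions and record every decision."""
    needs_approval = action.tool in SENSITIVE_TOOLS
    approved = approve(action) if needs_approval else True
    # Every decision is logged, including denials, so audits see the full trail.
    audit_log.append(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "action": asdict(action),
        "needs_approval": needs_approval,
        "approved": approved,
    }))
    return approved
```

The gate is only as strong as the person answering the prompt. If the `summary` shown to the user is itself shaped by injected text, an approval may not reflect what the action really does, which is the oversight problem the article describes.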

Source: Ars Technica