ChatGPT Voice Update Combines Speech and Text

OpenAI rolled out its latest ChatGPT Voice update in late November 2025, integrating voice conversations directly into the main chat interface. Users can now speak commands, watch responses appear on screen in real time, review past messages, and receive images or maps, all within a single window and without switching between voice and text modes.

Multimodal Integration for a Seamless Experience

ChatGPT began as a text-only system and later added voice. That made it more convenient, but users still had to switch back to the text chat to view images or other visual data, which felt like interacting with two separate chatbots. The latest update resolves this by combining voice, text, and images in a single multimodal interface. Occasional delays or missing maps may still occur, but the update marks a major step toward a more natural conversation experience.

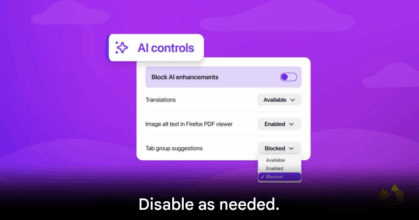

Many industry observers see this development as a clear model for Apple’s upcoming Siri, which is moving in the same direction. Siri is evolving from a voice-only assistant into a multimodal agent capable of summarizing emails, managing information across apps, and displaying visuals alongside its responses. By merging voice and text, Siri could deliver the experience Apple promises: speaking, showing images, and processing messages in a natural, fluid way.

Siri’s Future as a Smart Agent

The upgraded Siri is expected to become a more intelligent agent, capable of performing complex tasks such as booking a flight from Heathrow to Las Vegas by integrating directly with travel apps. This goes far beyond typical question-and-answer functions and requires a robust backend architecture, accurate app control, and context awareness.

Reports indicate that Apple may not develop this capability entirely in-house. The company is said to be close to a deal to pay Google $1 billion annually for access to Gemini, a 1.2-trillion-parameter model, to power Siri in iOS 26.4, expected in spring 2026. This approach differs from Apple’s earlier tests with OpenAI and Anthropic, and Siri would still use some in-house models in parallel.

Ultimately, users care about two things: data security, with Private Cloud Compute keeping personal information from being used to train models, and a Siri that genuinely works smarter next year. Whether it is powered by a fine-tuned Gemini model or another partner’s technology, the priority is that it works efficiently and reliably.

THIS IS OUR SAY

This update highlights how conversational AI is bridging the gap between voice and text, showing users in Southeast Asia (SEA) that assistants like ChatGPT and Siri will increasingly feel like natural dialogue. The era of separate modes is ending, and multimodal interaction is becoming the new standard.

Source: 9to5Mac